You've seen the videos of robots dancing and doing backflips? Cool, but until now, it was all spectacle. In 2026, everything changes. Tesla, Boston Dynamics, and Figure AI are all launching their robots into mass production - and this time, it's not just to make a splash on YouTube.

The thing is, AI is finally leaving cloud servers to install itself directly in robots, and it's going to disrupt the global manufacturing industry.

In this article

- The 6 Analog Devices predictions that will change everything

- The humanoid robot race: Tesla vs Boston Dynamics vs Figure AI

- Edge AI: when intelligence gets closer to machines

- Concrete applications arriving in factories

- Advantages and disadvantages of this revolution

- FAQ: Your questions about AI robotics

The 6 Analog Devices Predictions That Will Change Everything

Honestly, when Analog Devices - one of the world leaders in semiconductors - publishes its predictions for 2026, it's worth listening. And spoiler alert: AI is finally going to leave your screen to enter the physical world.

1. Robots that think locally

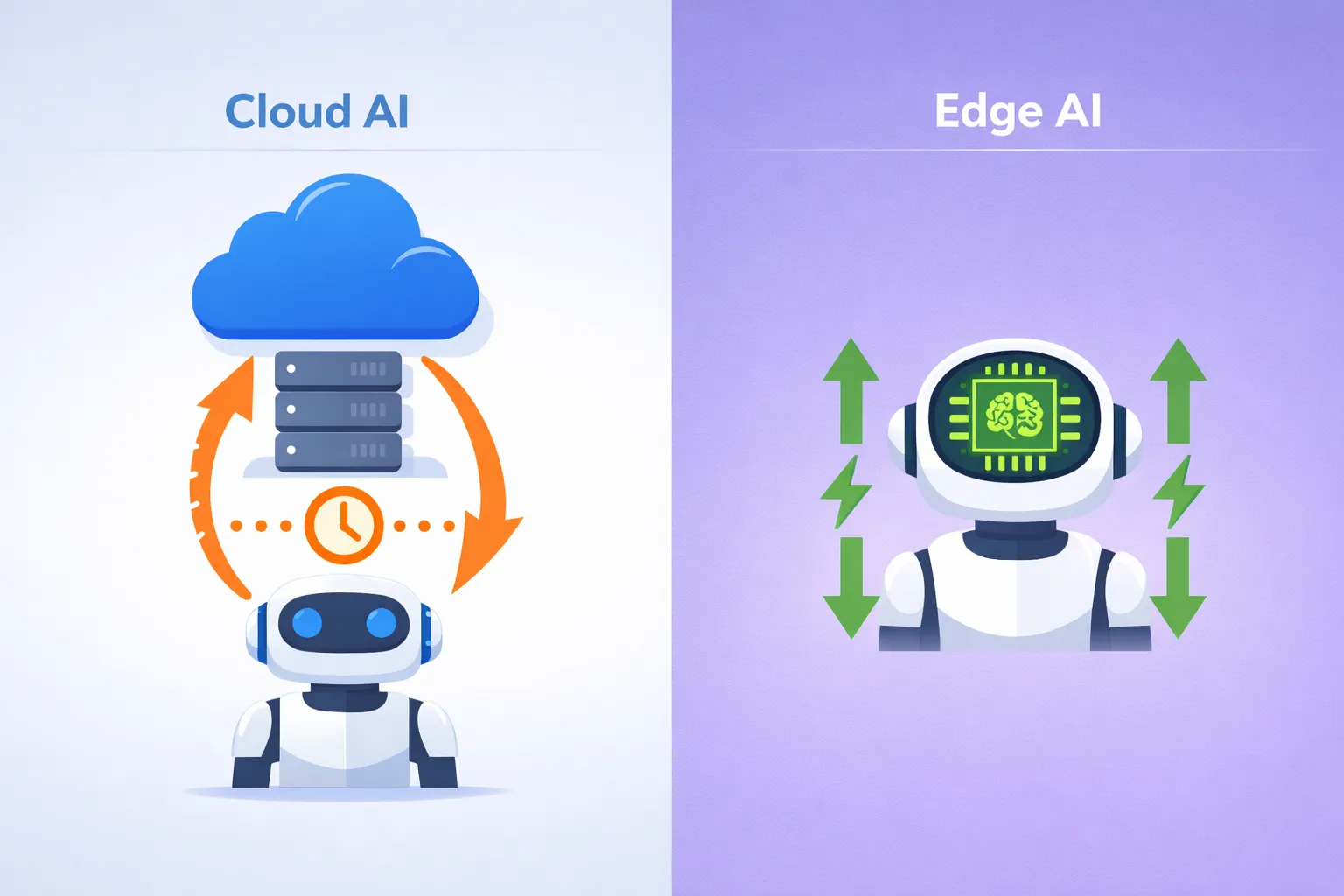

Gone is the model where the robot sends its data to a remote server, waits for a response, then acts. In 2026, decentralized architectures let robots make decisions in real time, without waiting on a network round trip. Like a human who pulls their hand away from a hot surface without thinking.

Massimiliano Versace, VP at Analog Devices, explains it well: robots will get closer to biological systems, with local circuits that manage reflexes. Result? Smoother movements and energy consumption divided by 10.
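
To make the "local reflex" idea concrete, here is a minimal sketch of a two-layer controller. It assumes a robot that reads temperature and contact force on every cycle. The thresholds, the action names and the `reflex` / `control_step` functions are hypothetical illustrations, not Analog Devices' actual architecture. The only point is that the reflex check runs on the robot itself, before (and without waiting for) any slower planner or remote server.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical limits for the fast "reflex" layer; real values depend on the robot.
MAX_TEMPERATURE_C = 60.0
MAX_CONTACT_FORCE_N = 150.0


@dataclass
class SensorReading:
    temperature_c: float
    contact_force_n: float


def reflex(reading: SensorReading) -> Optional[str]:
    """Local reflex: return an immediate action, or None to defer to the planner."""
    if reading.temperature_c > MAX_TEMPERATURE_C:
        return "retract"  # like pulling a hand away from a hot surface
    if reading.contact_force_n > MAX_CONTACT_FORCE_N:
        return "stop"
    return None


def control_step(reading: SensorReading, planned_action: str) -> str:
    """Run the reflex check first; the slower plan applies only if the reflex stays silent."""
    override = reflex(reading)
    return override if override is not None else planned_action


print(control_step(SensorReading(temperature_c=75.0, contact_force_n=20.0), "grasp"))  # retract
print(control_step(SensorReading(temperature_c=25.0, contact_force_n=20.0), "grasp"))  # grasp
```

In a real robot the reflex layer would typically live in firmware or dedicated hardware, so it keeps working even if the higher-level software stalls.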

2. The return of analog computing

The thing is, traditional digital processors can waste as much as 80% of their energy just moving data between memory and compute units. Analog computing processes the signal right where the sensor captures it, merging sensing and computation. For a mobile robot, this changes everything: much longer battery life and real-time responsiveness.

3. Physical intelligence

AI models will no longer learn only from text and images, but also from vibration, sound, magnetism, and movement. Imagine a robot in a factory that detects a mechanical problem just by "listening" to abnormal vibrations from a machine.
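
What does "listening" to a machine look like in practice? Here is a minimal sketch, assuming a window of accelerometer samples and a few RMS (root-mean-square) values recorded earlier while the machine was known to be healthy. The `is_abnormal` function and the threshold `k=3.0` are illustrative choices, not a method described by Analog Devices. Production systems usually look at frequency bands and learned models rather than a single RMS figure.

```python
import math
import statistics


def rms(window):
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def is_abnormal(window, healthy_rms_history, k=3.0):
    """Flag the window if its RMS exceeds the healthy mean by more than k standard deviations."""
    mean = statistics.mean(healthy_rms_history)
    std = statistics.stdev(healthy_rms_history)
    return rms(window) > mean + k * std


# RMS values previously measured on the same machine while it was healthy (hypothetical data).
healthy_history = [0.70, 0.71, 0.72, 0.69, 0.70]

normal = [math.sin(2 * math.pi * i / 50) for i in range(200)]          # amplitude 1
rattling = [2.0 * math.sin(2 * math.pi * i / 50) for i in range(200)]  # amplitude doubled

print(is_abnormal(normal, healthy_history))    # False
print(is_abnormal(rattling, healthy_history))  # True
```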

4. Audio as the dominant interface

In 2026, your earbuds and AR glasses become context-aware companions. Not just basic voice recognition, but a true understanding of your sound environment. Gen Z with their "always-in-ear" hearables will love it.

5. Autonomous AI agents

Agentic AI makes decisions and acts without human intervention. Paul Golding from Analog Devices gives a telling example: an agent on the factory floor that detects a machine starting to fatigue, automatically reroutes production to a healthy machine, and coordinates with the supply chain to adjust inventory. All without a human touching anything.
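
Golding's scenario can be sketched as a simple rule-based loop. This is a deliberately simplified stand-in: a real agent would rely on learned health models and talk to production and supply-chain software, and the `Machine` class, the `fatigue` score and `FATIGUE_LIMIT` are all hypothetical. The function returns the list of moves it made, which is where a supply-chain or inventory system could be notified; that part is not modelled here.

```python
from dataclasses import dataclass, field

FATIGUE_LIMIT = 0.8  # hypothetical health threshold (0 = like new, 1 = worn out)


@dataclass
class Machine:
    name: str
    fatigue: float
    queue: list = field(default_factory=list)


def reroute(machines):
    """Move queued jobs off fatigued machines onto the least-loaded healthy ones.

    Returns a list of (job, from_machine, to_machine) moves.
    """
    healthy = [m for m in machines if m.fatigue < FATIGUE_LIMIT]
    moves = []
    if not healthy:
        return moves  # nowhere safe to send work: escalate instead of rerouting
    for machine in machines:
        if machine.fatigue >= FATIGUE_LIMIT:
            while machine.queue:
                job = machine.queue.pop(0)
                target = min(healthy, key=lambda m: len(m.queue))
                target.queue.append(job)
                moves.append((job, machine.name, target.name))
    return moves


line = [Machine("A", 0.9, ["j1", "j2"]), Machine("B", 0.2), Machine("C", 0.3, ["j3"])]
print(reroute(line))  # [('j1', 'A', 'B'), ('j2', 'A', 'B')]
```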

6. Specialized micro-intelligences

Compact models that perform extremely well within their own domain and are small enough to run on edge devices. They are the missing link between rigidly programmed AI and massive models like GPT-5.

The Humanoid Robot Race: Tesla vs Boston Dynamics vs Figure AI

Game changer: 2026 marks the year when three giants move from prototype to mass production. And the numbers are staggering.

Boston Dynamics: Atlas finally leaves the lab

On January 5, 2026 at CES, Boston Dynamics presents its all-electric Atlas to the public for the first time. Hyundai, which owns the company, announces it wants to deploy "tens of thousands" of robots in its factories.

The strategy? The Software-Defined Factory - a factory where robots can be reprogrammed in days rather than re-engineering the line over years. Unlike the fixed conveyors of traditional automation, Atlas adapts to production changes.

Tesla Optimus: the outsized ambition

Elon Musk never does things by halves. Tesla is currently building a production line designed for one million Optimus units per year. In May/June 2026, the company plans to unveil Optimus V3, which Musk describes this way: "It will seem so real that you'll need to pinch it to believe it's a robot."

The long-term roadmap? Optimus V4 at 10 million units, V5 at 50-100 million. Tesla has reportedly placed a $685 million order for actuators with Chinese supplier Sanhua. It's serious.

Figure AI: robots building robots

Figure AI takes a radical approach with BotQ, a factory where Figure 03 robots assemble... other Figure 03 robots. Initial capacity: 12,000 robots per year, with a target of 100,000 in four years.

The principle is simple but brilliant: each robot produced adds to production capacity. It's a snowball effect. Investors are following: the startup is valued at $39 billion after its latest funding round.
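
How big a snowball is that? A quick compound-growth calculation on the two figures above shows what steady yearly growth takes BotQ from 12,000 to 100,000 robots per year in four years. This is back-of-the-envelope arithmetic on the article's numbers, not Figure AI's own planning model.

```python
def implied_annual_growth(start, target, years):
    """Compound annual growth rate needed to go from start to target in `years` years."""
    return (target / start) ** (1 / years) - 1


rate = implied_annual_growth(12_000, 100_000, 4)
print(f"{rate:.1%}")  # 69.9%

# Year-by-year capacity if that rate held steady.
capacity = 12_000.0
for year in range(1, 5):
    capacity *= 1 + rate
    print(year, round(capacity))
```

In other words, capacity would need to grow by roughly 70% every year, which is why the "robots build robots" loop matters so much to the plan.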

| Company | Robot | Announced scale | Strategy |
|---|---|---|---|
| Boston Dynamics | Atlas | Tens of thousands (Hyundai deployment goal) | Software-Defined Factory |
| Tesla | Optimus | ~180,000 in 2026 (estimated) | Integrated mass production |
| Figure AI | Figure 03 | 12,000/year (BotQ initial capacity) | Robots building robots |

Edge AI: When Intelligence Gets Closer to Machines

To be honest, the real revolution isn't the robot itself - it's the AI running inside it. Edge AI moves intelligence from the cloud to the device. And that changes absolutely everything.

Neuromorphic computing explodes

Neuromorphic chips mimic how the human brain works. Where a traditional chip separates memory and computation (and can lose as much as 80% of its energy shuttling data between them), a neuromorphic chip fuses the two.

Result: 80 to 100 times less energy for sporadic tasks (anomaly detection, sensor processing). A vibration sensor can operate for months on battery in an isolated area. With traditional architecture, it would last a few days.

The European Commission is investing heavily, and analysts predict a market takeoff starting in 2026.
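
Why do sporadic tasks benefit so much? Neuromorphic hardware is event-driven: it does work when an input changes instead of on every clock tick. The sketch below illustrates that principle with a simple "send-on-change" rule and counts processing steps as a rough proxy for energy. It is an analogy under stated assumptions (a quiet signal, a change threshold of 1.0), not a model of any real neuromorphic chip.

```python
def clocked_ops(samples):
    """Conventional approach: run the processing step on every sample."""
    return len(samples)


def event_driven_ops(samples, threshold):
    """Event-driven approach: only do work when the signal changes by at least `threshold`."""
    ops = 0
    last = samples[0]
    for x in samples[1:]:
        if abs(x - last) >= threshold:
            ops += 1  # an "event" (spike) triggers computation
            last = x
    return ops


# A quiet sensor signal with one brief disturbance, as in sporadic anomaly detection.
signal = [0.0] * 1000
signal[500] = 5.0

print(clocked_ops(signal))            # 1000
print(event_driven_ops(signal, 1.0))  # 2
```

The battery arithmetic follows the same logic: if a sensor node lasted 3 days (an assumed figure) and computation dominated its power budget, an 80x reduction would stretch that to roughly 240 days, about 8 months.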

Model optimization

Quantization and pruning techniques now allow running models with billions of parameters on edge devices. SmoothQuant, OmniQuant, SparseGPT... these technical names hide a simple reality: powerful AI is no longer reserved for data centers.
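
To see what those two families of techniques actually do, here are their textbook baselines: symmetric per-tensor int8 quantization (store each weight as a small integer plus one shared scale) and magnitude pruning (zero out the smallest weights). SmoothQuant, OmniQuant and SparseGPT are considerably more sophisticated refinements; this sketch does not implement any of them.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= q * scale, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


w = [0.5, -1.27, 0.01, 0.3]
q, scale = quantize_int8(w)
print(q)                        # 1 byte per weight instead of 4 for float32
print(dequantize(q, scale))     # close to the original weights
print(magnitude_prune(w, 0.5))  # [0.5, -1.27, 0.0, 0.0]
```

Going from 32-bit floats to 8-bit integers cuts memory by 4x, and pruned (zero) weights can be skipped entirely by sparse kernels: that is what lets large models squeeze onto edge hardware.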

Concrete Applications Arriving in Factories

Predictive maintenance: no more surprise breakdowns

Integrated sensors detect vibration, temperature, and acoustic patterns to predict failures before they happen. Schneider Electric reports a 20% reduction in downtime and a 15% reduction in maintenance costs. Another study reports a 30% reduction in unplanned downtime.

FANUC, a leader in automation, uses edge inference for near-zero unplanned downtime. In the automotive industry, every minute of downtime costs thousands of euros. You can imagine the impact.
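
One of the simplest ways to "predict a failure before it happens" is to fit a trend to a degrading health metric and extrapolate to an alarm threshold. Here is a minimal sketch of that idea, assuming vibration RMS logged every 10 operating hours and an alarm level of 4.0 mm/s (both hypothetical). It uses a plain least-squares line; it is not Schneider Electric's or FANUC's method, and real systems typically use richer models.

```python
def hours_until_threshold(hours, readings, threshold):
    """Fit a least-squares line to a degrading metric and extrapolate to the alarm threshold.

    Returns the estimated hours remaining after the last reading, or None if the
    metric is not trending upward.
    """
    n = len(hours)
    mean_t = sum(hours) / n
    mean_y = sum(readings) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(hours, readings))
    var = sum((t - mean_t) ** 2 for t in hours)
    slope = cov / var
    if slope <= 0:
        return None  # no upward trend, nothing to extrapolate
    intercept = mean_y - slope * mean_t
    crossing = (threshold - intercept) / slope  # time at which the line hits the threshold
    return max(0.0, crossing - hours[-1])


# Hypothetical vibration RMS (mm/s) logged every 10 operating hours, alarm at 4.0 mm/s.
print(hours_until_threshold([0, 10, 20, 30], [1.0, 1.5, 2.0, 2.5], 4.0))  # ~30 hours
```

Thirty hours of warning is enough to schedule the repair during a planned stop instead of suffering it mid-shift.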

Automated quality control

In PCB manufacturing, edge AI enabled a 30% reduction in end-of-line testing with faster defect detection. Vision systems scan for microscopic anomalies in less than a second and decide: accept, reject, or reinspect.

The impact? Lines running twice as fast as with manual inspection, with the goal of shipping zero defects.
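
The accept / reject / reinspect decision described above can be sketched as two confidence thresholds applied to the defect probability a vision model outputs. The model itself, the `triage` function and the threshold values are assumptions chosen for illustration.

```python
def triage(defect_probability, accept_below=0.05, reject_above=0.90):
    """Map a vision model's defect probability to accept / reject / reinspect."""
    if defect_probability < accept_below:
        return "accept"
    if defect_probability > reject_above:
        return "reject"
    return "reinspect"  # uncertain cases go to a second camera pass or a human


for p in (0.01, 0.40, 0.97):
    print(p, triage(p))
```

Widening the "reinspect" band catches more borderline defects at the cost of throughput; narrowing it speeds up the line but lets more uncertain parts through.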

Dynamic Digital Twins

Digital twins are no longer just 3D models. In 2026, they become living entities that mirror physical robots in real time, learning and optimizing continuously. Companies that adopt them at scale are expected to see double-digit improvements in OEE (Overall Equipment Effectiveness) by 2027.
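
OEE is the standard way of scoring a production line: the product of availability (how much of the planned time the line actually runs), performance (how fast it runs compared with its ideal speed) and quality (how many parts come out good). Here is a minimal calculation using made-up figures for one 8-hour shift.

```python
def oee(planned_min, downtime_min, ideal_cycle_min, total_count, good_count):
    """Overall Equipment Effectiveness = Availability x Performance x Quality."""
    run_time = planned_min - downtime_min
    availability = run_time / planned_min                   # share of planned time actually running
    performance = ideal_cycle_min * total_count / run_time  # actual speed vs ideal speed
    quality = good_count / total_count                      # share of parts that are good
    return availability * performance * quality


# Hypothetical 8-hour shift: 48 min of stops, 1-minute ideal cycle, 400 parts made, 380 good.
print(f"{oee(480, 48, 1.0, 400, 380):.1%}")  # 79.2%
```

Because the three factors multiply, a digital twin that trims even a few minutes of stops or a handful of scrapped parts moves the final score noticeably.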

Advantages and Disadvantages of This Revolution

+ Advantages

- Multiplied productivity: by some estimates, one humanoid robot can match the output of 5-10 human workers, depending on task complexity

- Reduced downtime: predictive maintenance cuts the surprise breakdowns that cost millions

- Flexibility: unlike fixed assembly lines, robots adapt to production changes

- Energy efficiency: neuromorphic chips consume up to 100x less energy

- Safety: dangerous tasks can be delegated to robots

- Disadvantages

- High initial cost: investments in robotics and edge AI remain substantial

- Job disruption: the professional transition will be brutal for some sectors

- Technology dependence: a systemic failure can paralyze an entire production chain

- Complexity: integrating these systems requires specialized skills

My advice

Don't watch this revolution from afar thinking "it doesn't concern me." Even if you don't work in manufacturing, the effects will spread everywhere. Production costs will drop, delivery times will shorten, and new jobs will emerge around maintaining and programming these systems.

If you work in a field likely to be automated, now is the time to train for complementary skills: AI systems supervision, robotic maintenance, industrial data analysis. Companies deploying these technologies will need people to pilot them, and these positions are likely to pay better than the ones they replace.

Frequently Asked Questions

Will humanoid robots really replace human workers?

Not replace, but transform roles. Hyundai talks about "robotic co-workers" rather than replacement. Repetitive and dangerous tasks will be automated, but supervision, maintenance, and programming will create new jobs. The transition will be difficult for some sectors, but history shows that automation creates more jobs than it destroys - just not the same ones.

When will these robots actually be available?

2026 marks the start of mass production. Boston Dynamics presents Atlas at CES in January, Tesla targets late 2026 to start Optimus production, and Figure AI's BotQ plant starts with a capacity of 12,000 units per year. By 2027-2028, we could be talking about hundreds of thousands of robots deployed in factories worldwide.

Is Edge AI really more efficient than the cloud?

For real-time applications, yes. When a robot needs to react within milliseconds (avoid an obstacle, adjust a movement), waiting for a round trip to the cloud is not an option. Edge AI removes network latency, keeps working without an internet connection, and consumes much less energy. The cloud remains relevant for model training and large-scale data analysis.
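
A bit of arithmetic shows why milliseconds matter: a moving robot keeps moving while it waits for a decision. The speeds and latencies below are hypothetical round numbers, not measurements of any specific robot or cloud service.

```python
def reaction_distance_mm(speed_m_per_s, network_round_trip_ms, inference_ms):
    """Distance a robot keeps moving before a decision comes back (m/s x ms = mm)."""
    return speed_m_per_s * (network_round_trip_ms + inference_ms)


# Hypothetical figures: a robot arm moving at 1.5 m/s.
cloud = reaction_distance_mm(1.5, network_round_trip_ms=80, inference_ms=20)
edge = reaction_distance_mm(1.5, network_round_trip_ms=0, inference_ms=10)
print(cloud, edge)  # 150.0 15.0
```

Fifteen centimetres of blind travel versus one and a half: around people or fragile parts, that difference decides whether the robot stops in time.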

What are the security risks?

Like any connected system, AI robots are vulnerable to cyberattacks. The decentralized architecture of Edge AI reduces part of this risk: there is no single central server whose compromise would affect every robot at once, although each device then has to be secured individually. Manufacturers also integrate hardware security mechanisms into neuromorphic chips.

Conclusion

2026 isn't just another year in AI evolution. It's the moment when technology definitively leaves screens to manifest in the physical world. Tesla with its one-million-unit Optimus line, Boston Dynamics with Atlas at CES, Figure AI with robots that build robots... the puzzle pieces are coming together.

The robotics + decentralized AI duo redefines what it means to "manufacture" something. Self-optimizing factories, machines that predict their own breakdowns, robots that learn in real-time from their environment. It's not science fiction, it's next January.

Want to understand how AI is concretely transforming the world of work? Discover our other analyses in AI News.

About the author: Flavien Hue has been testing and analyzing artificial intelligence tools since 2023. His mission: democratizing AI by offering practical and honest guides, without unnecessary technical jargon.